Summary: We introduce Pathfinder, a prototype for exploring how language models can allow the interfaces between networked applications to be defined less precisely. We present it as an early research demo, in the hope of taking a first step towards an internet simple enough to be maintained by individuals.

Documenting the Pathfinder Research Demo

Introduction

We've developed a new substrate to explore how the communication requirements between participants in networked applications can be simplified, along with an app to program them.

Our overarching goal is to develop internet protocols that give users back control over their devices and digital presence. We believe that the mechanisms eroding users' freedoms today are largely economic: the client/server architecture enables the physical ownership of servers, which in turn enables the network-effect-driven monopolies that can produce detrimental experiences. It is not that existing providers don't want to do better; rather, their hands are tied by the economic reality of the digital infrastructure they build upon. We hope to make the esoteric knowledge held by computer programmers accessible to regular people, so that they may interact with their devices as profoundly as developers can.

With this in mind, our primary objective for this release is to develop ideas that reduce how strict an interface networked applications have to demand. The argument is that every point where the communication between computers requires precision must be implemented by a skilled developer. Each such communication point exponentially increases the complexity of the system, so one needs ever more skilled developers to tame the design. Hiring many developers to deal with all of this then leads one to inadvertently build a large corporation that suddenly needs to make a lot of money. We believe that addressing this is a first step towards building a collaborative internet that can be maintained by its individual users.

To give an example, say you have access to some underlying transport protocol that someone else maintains and you wish to implement simple direct messages. Classically, every computer involved in the conversation needs to agree upon the shape of the data payload sent. There is an interface between each chat participant and it needs to be precise: one user can't simply start sending messages with reactions, images, or games unless every client is upgraded with code to handle the new message type. This sort of precision is what we wish to eliminate. We want the data payloads in the example to just be "whatever", with the clients figuring it out without any extra effort.

Please remember that this is an initial release of software that is very much in development. Treat it as a minimum viable implementation of an idea, and expect there to be issues.

Showcase

Let's let the demo speak for itself with only a little bit of technical background.

Backend

The basic setup[1] is that each user keeps track of a virtual append-only "file system". The user interface is then a function of that data. Networked applications are realized simply by a foreign computer writing some data into a user's file system. The file tree needs a little bit of order, so we introduce protocols, each of which is a set of valid paths together with the constraint that all data must be written to the leaves of the tree. For instance, the "directMessages" protocol is:

directMessages

/conversations&/{sender}+

There is only one valid path, directMessages/conversations/{sender}. The & symbol means everyone can write (or descend) through the folder, and + means a user can write to the folder only if their logged-in user id matches the folder name. The {sender} part is simply a variable, much like a path parameter in an HTTP route. Remember, the constraint is that data can only be written to the leaves of the tree, so to send a text message you write some payload to directMessages/conversations/your_id/msg_hash. On the other side, the recipient simply reads everything directly below directMessages/conversations to get all the people they've talked to, and queries any particular user id folder to get the conversation contents. This differs from a real file system in that all messages are sorted, so a query always returns the most recent last, and in that it is transactional with ACID compliance.
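The access rules above can be sketched in a few lines of JavaScript. This is a minimal illustration and not Pathfinder's implementation: the names parseProtocolPattern and canWrite are hypothetical, and the sketch assumes that a folder without a modifier is closed to everyone, which the description does not specify.

```javascript
// Hypothetical helper: split a protocol pattern such as
// "conversations&/{sender}+" into segments with their access modifier.
function parseProtocolPattern(pattern) {
  return pattern.split("/").filter(Boolean).map((raw) => {
    const last = raw[raw.length - 1];
    const modifier = last === "&" || last === "+" ? last : null;
    const name = modifier ? raw.slice(0, -1) : raw;
    const isVariable = name.startsWith("{") && name.endsWith("}");
    return { name, modifier, isVariable };
  });
}

// Returns true if `writerUid` may write a leaf below `folders`,
// where `folders` are the concrete folder names of the target path.
function canWrite(pattern, folders, writerUid) {
  const segments = parseProtocolPattern(pattern);
  if (segments.length !== folders.length) return false; // not a valid path
  return segments.every((seg, i) => {
    const folder = folders[i];
    if (!seg.isVariable && seg.name !== folder) return false;
    if (seg.modifier === "&") return true; // open to everyone
    if (seg.modifier === "+") return folder === writerUid; // owner only
    return false; // assumption: unmarked folders are closed
  });
}

const pattern = "conversations&/{sender}+";
console.log(canWrite(pattern, ["conversations", "42"], "42")); // true
console.log(canWrite(pattern, ["conversations", "42"], "7")); // false
```

User 42 may write into their own folder inside anyone's conversations tree, while user 7 may not write into the folder named 42.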

Because this is an initial research demo, all of this is centralized.

The Graph

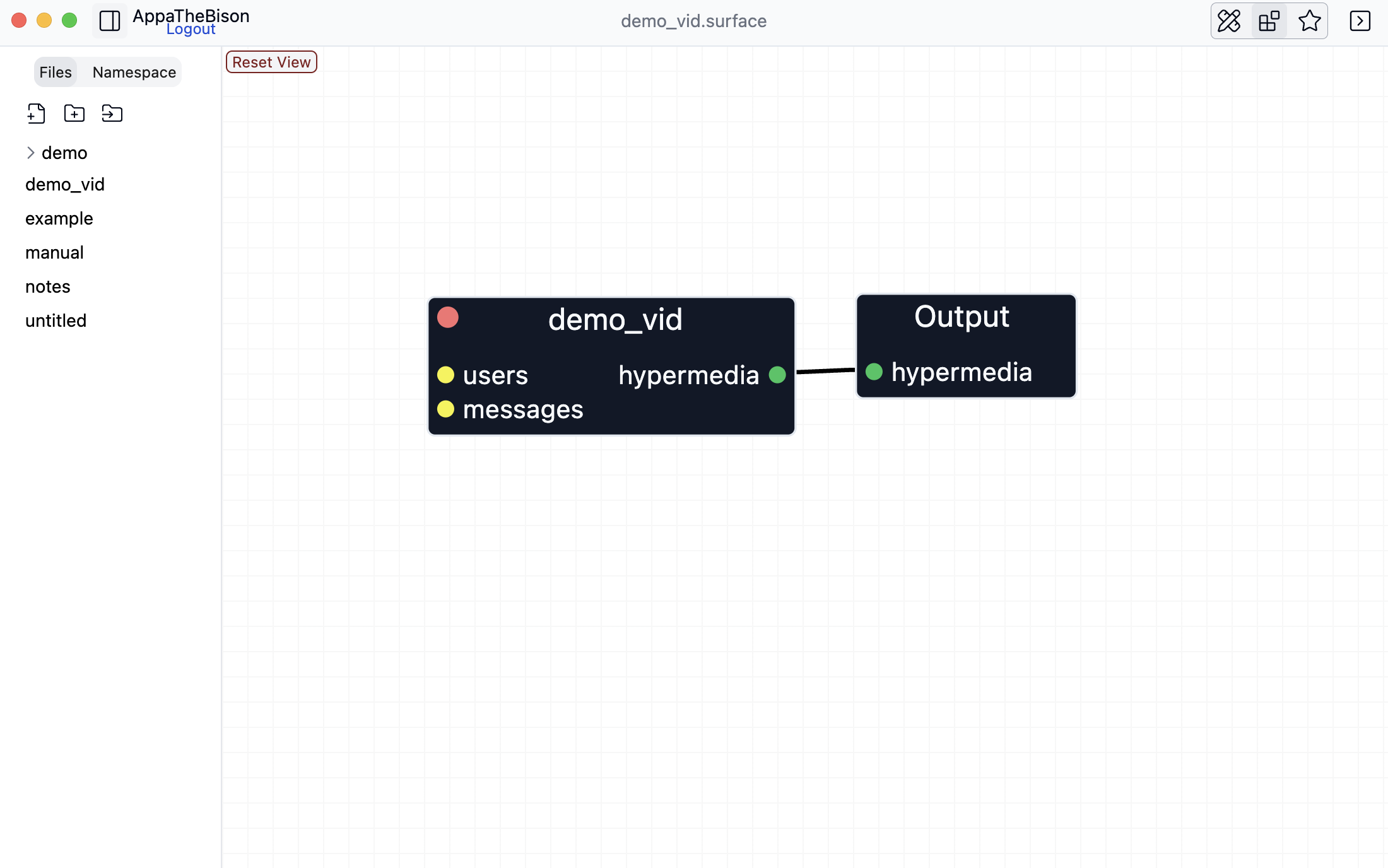

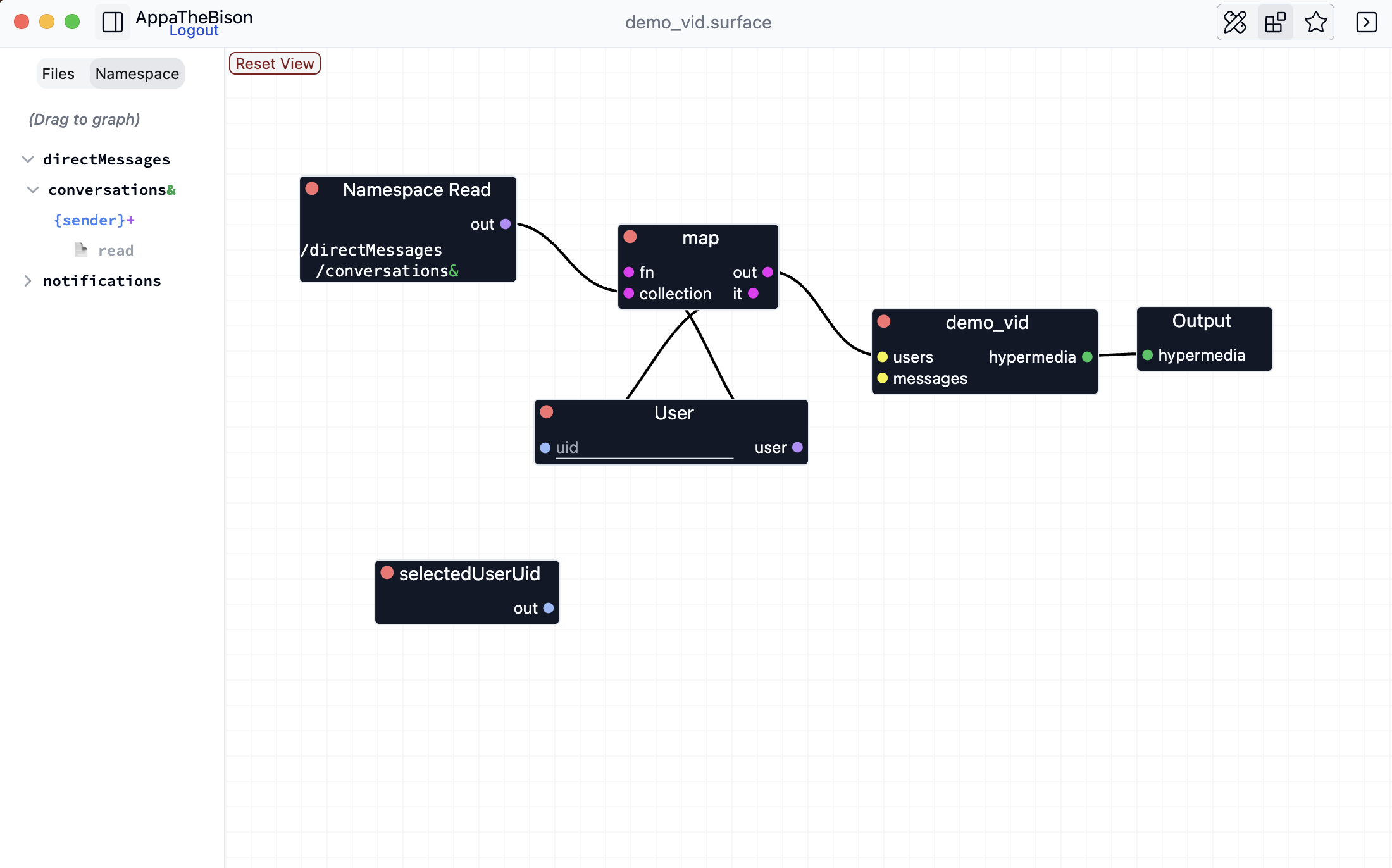

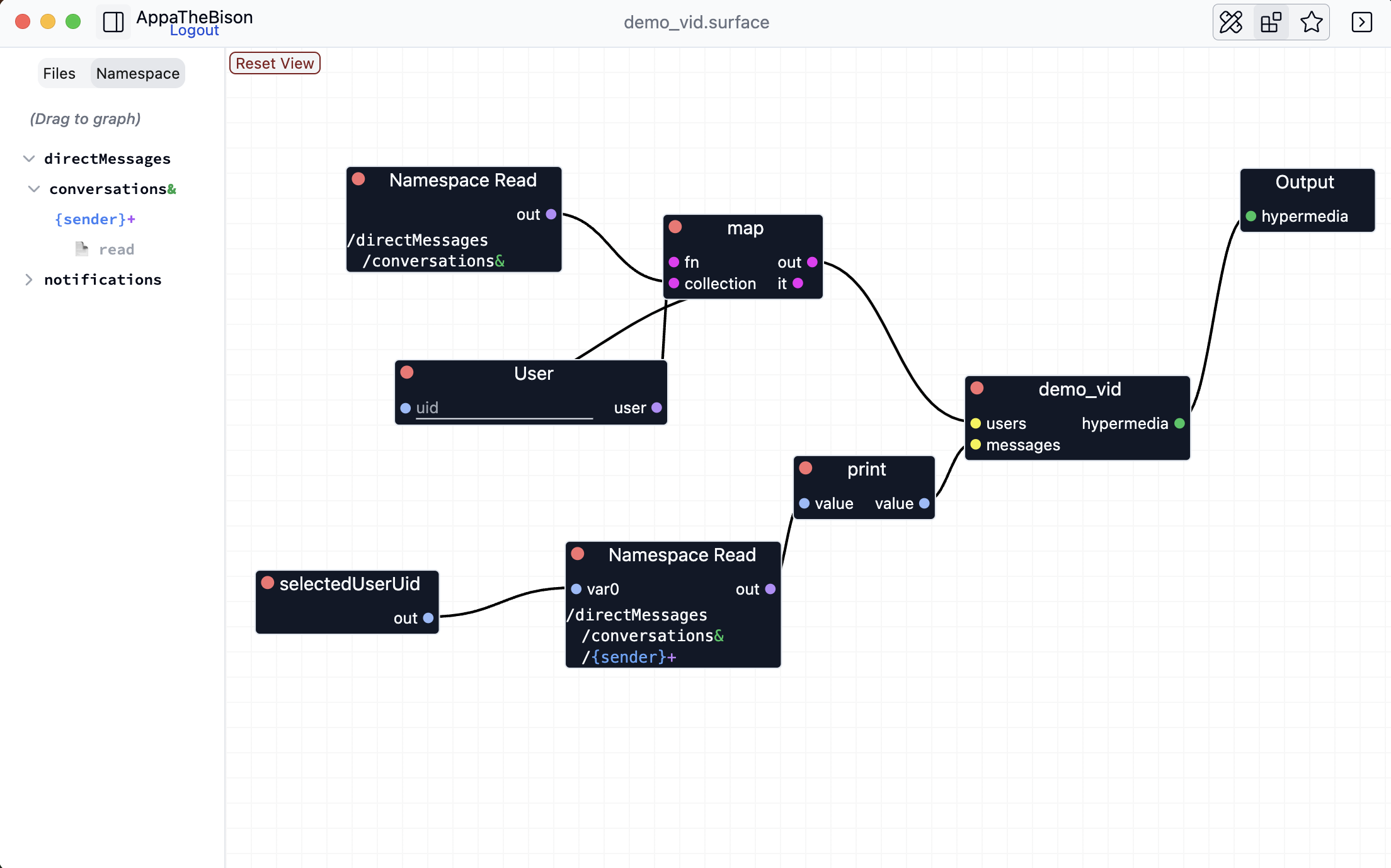

Let's implement just that and get all the people who have texted us via the directMessages protocol. Pathfinder makes this easy by allowing us to construct the logic in a node graph.

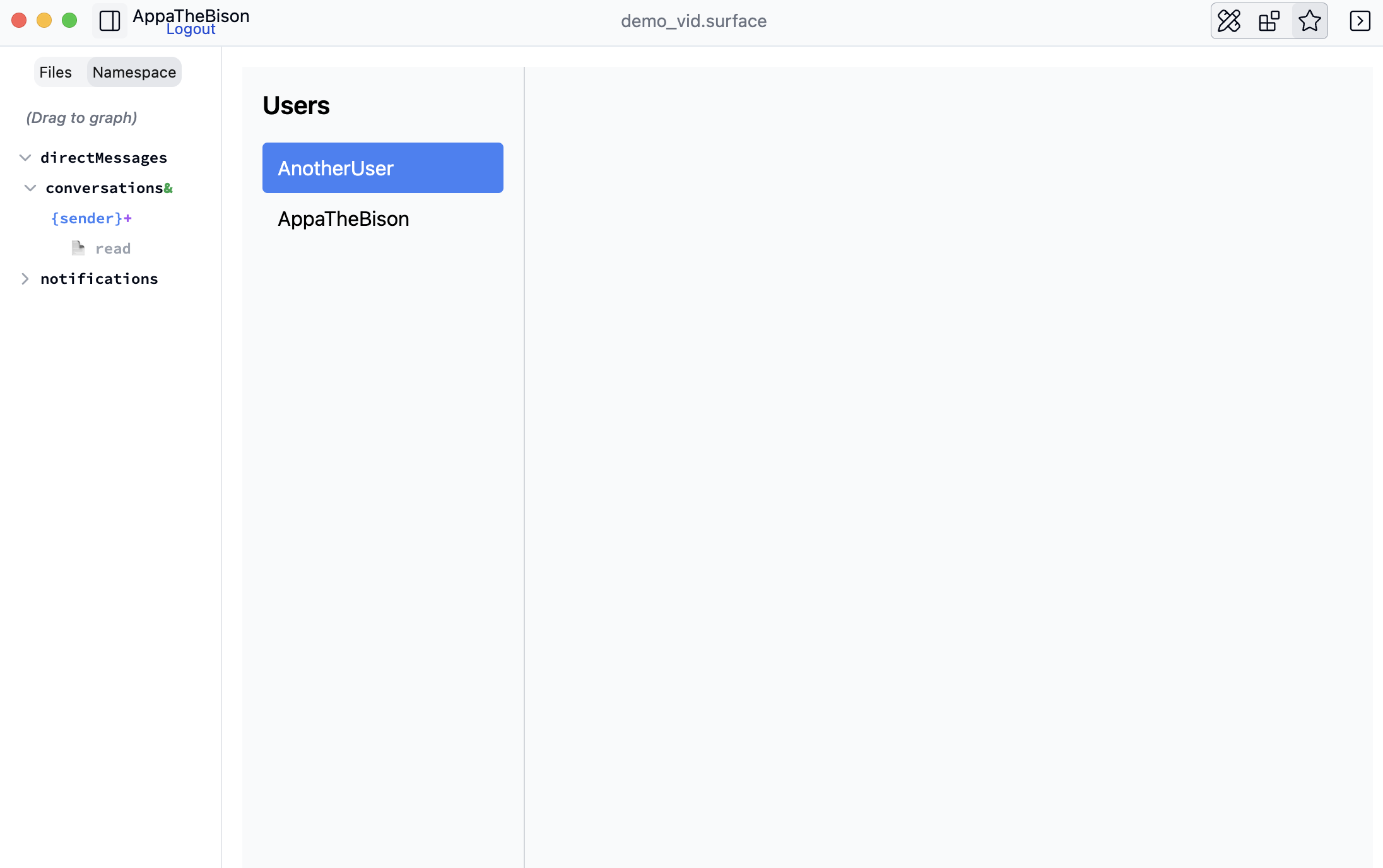

So, what we've done is create a query of all the folders below directMessages/conversations by dragging the element from the sidebar into our node graph. This node yields one output with a list of all the folder names (which, because of the protocol, happen to be the unique IDs of each user that has texted us). We took that data and plugged it directly into the "demo_vid" surface, which is set up with a hypermedia template[2] that renders the list contents. Since we likely want the usernames, we implement a little more logic by mapping the "network/user" node over each element returned by the query. This node takes a uid and returns an object like {username: string, uid: string}, which we can interpolate in our template.

Direct Messaging Application

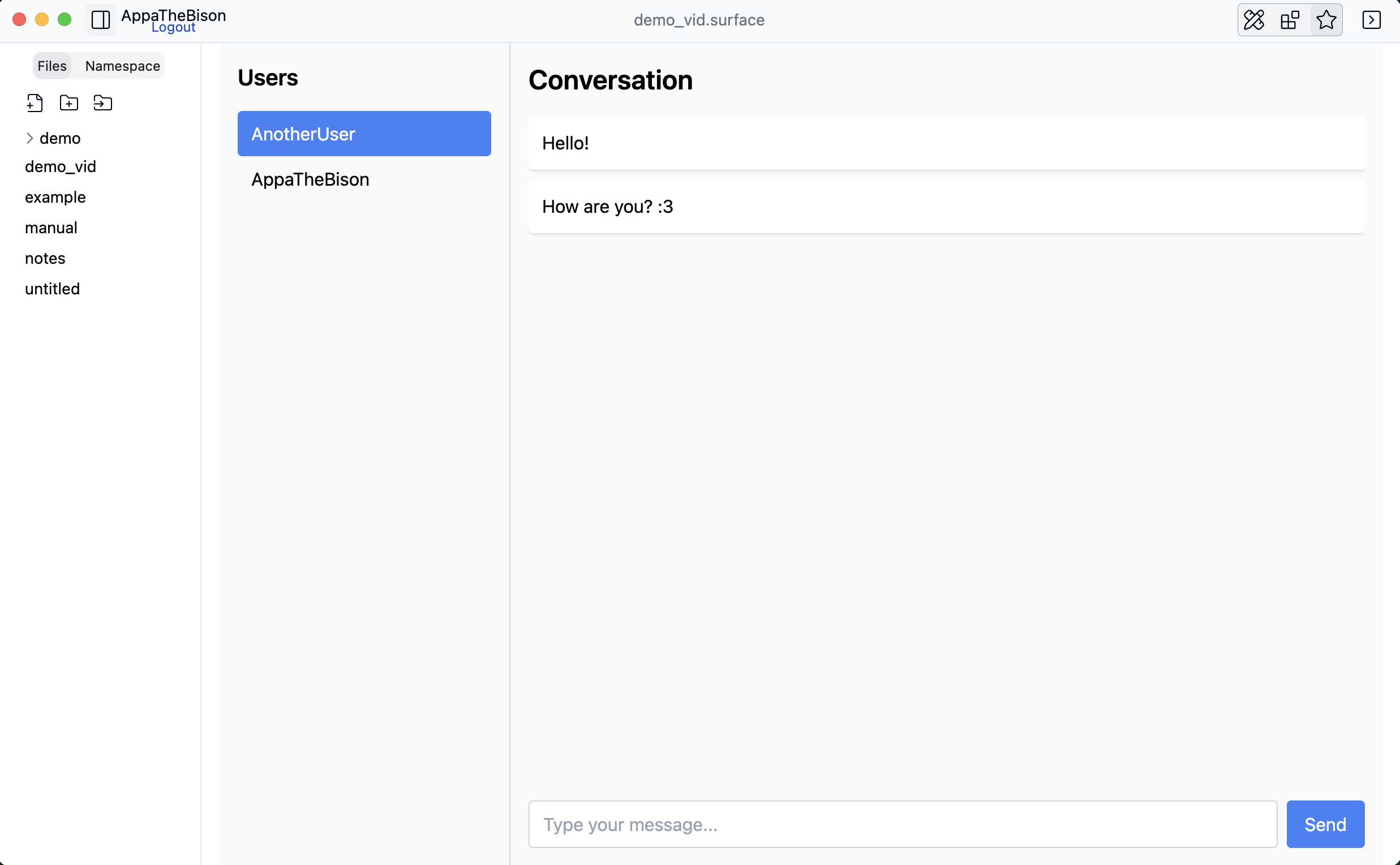

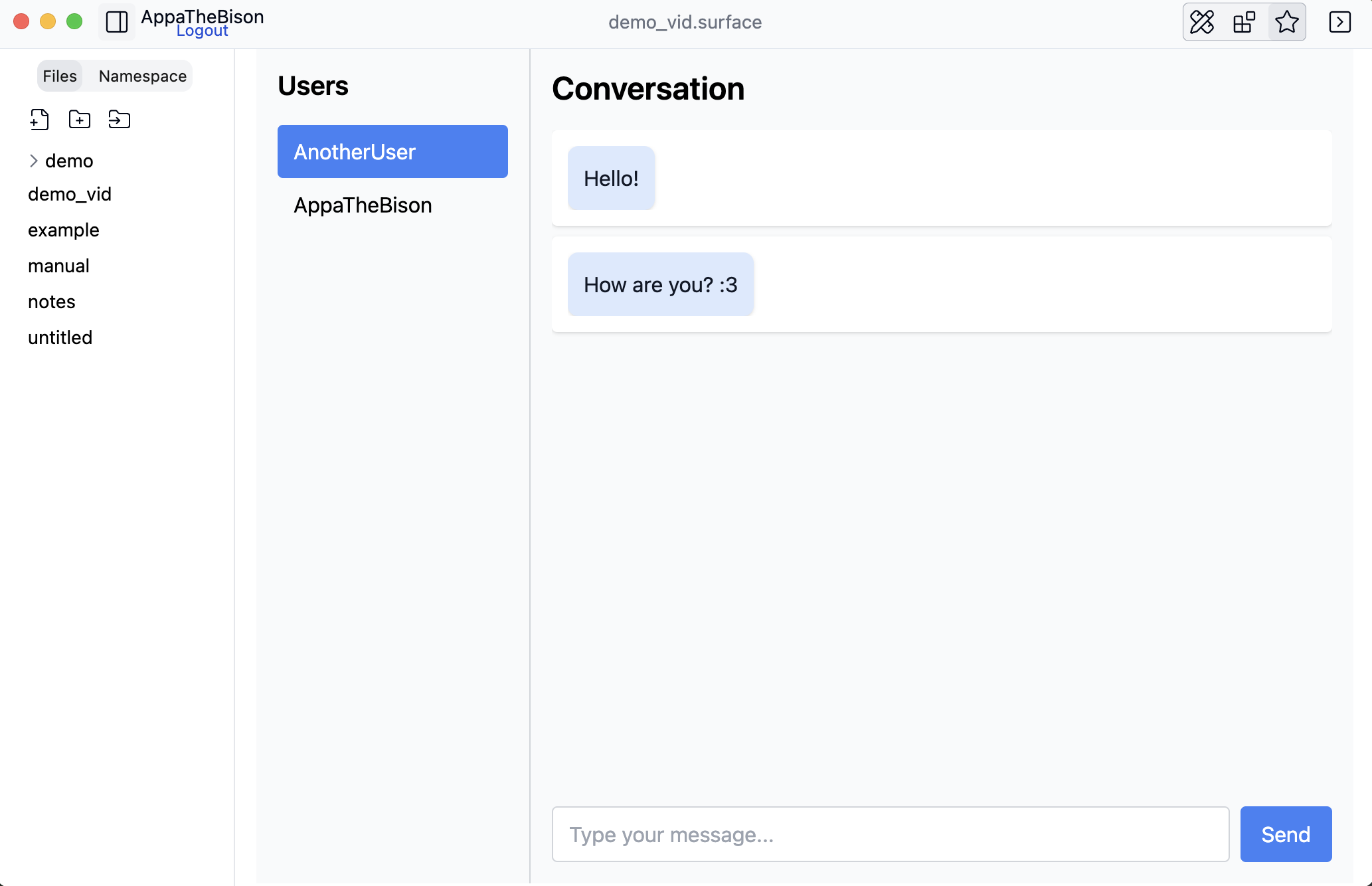

Let us complete the implementation of a client-side direct messaging application. We will send payloads like {text: "string"} and render the ones we have received. For this, we will work off of a hypermedia template which is already set up to render user names on the left and message threads on the right in a split view.

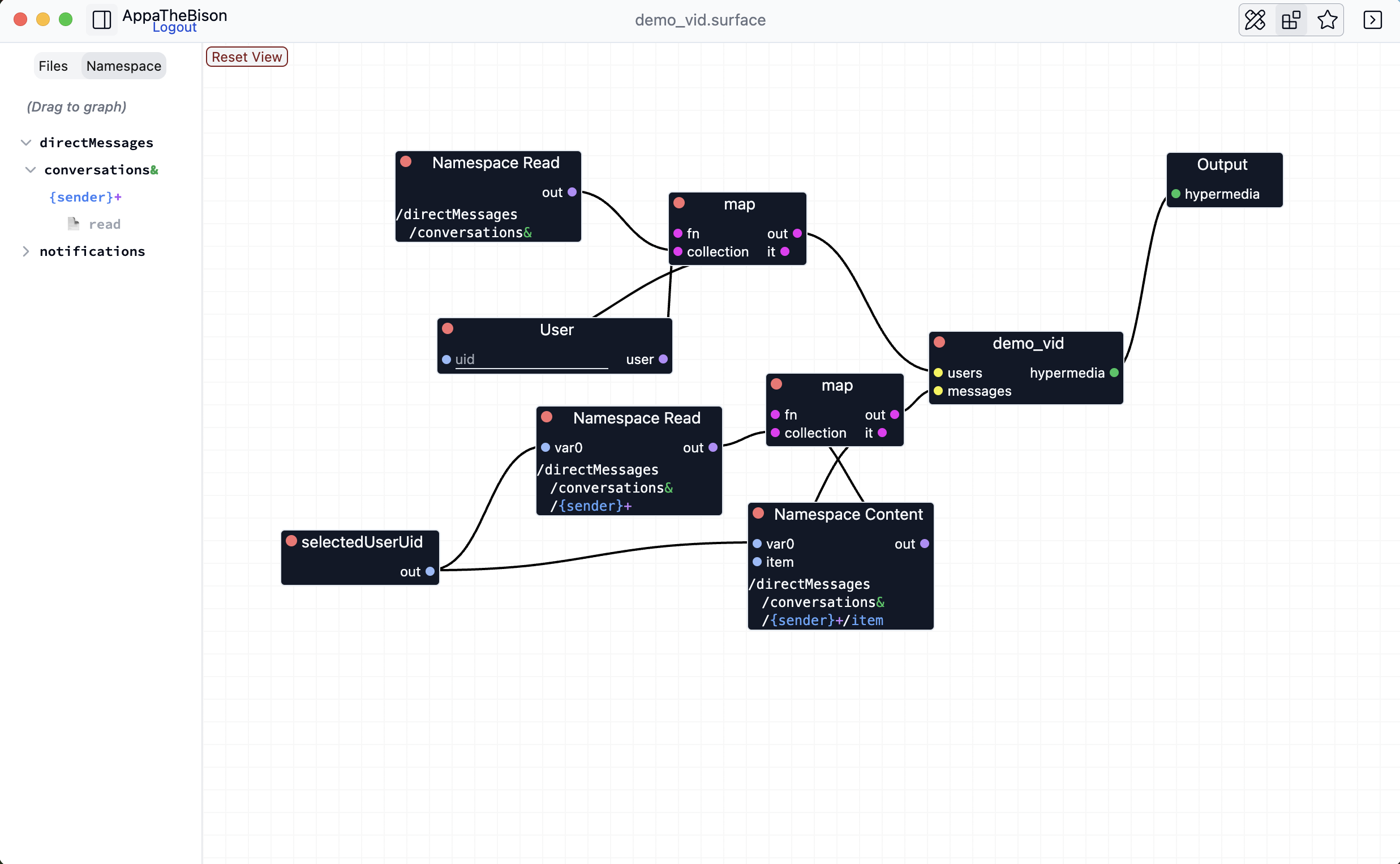

Now we can get the usernames like before. The hypermedia template exposes the uid of the selected user in our node graph through the "selectedUserUid" node.

We now need to query the message thread for the selected user. Recall how the protocol is set up: the messages we've received from another user are stored in the subfolder below conversations, which is the variable part in directMessages/conversations/{sender}. So if the UID is "42", the messages are stored at directMessages/conversations/42. Let us query it in the graph by dragging the "{sender}" part of the tree in the left pane over.

We see that the "Namespace Read" node for /directMessages/conversations/{sender} has one input socket labeled "var0", which corresponds to the one variable ({sender}) inside our queried path. Whatever we plug into "var0" is placed in the path at the position of the variable, so if we plug the text "foo" into "var0" we query the path /directMessages/conversations/foo. If we run this, we will see that it doesn't yet work. The "print" node reveals that our new namespace read returns an array of hashes. Each hash corresponds to one message that has been sent, so if the hash is bfc4 then the message from user 42 lives at /directMessages/conversations/42/bfc4. We can get the actual content stored at such a path by creating a "Namespace Content" node, which we do by dragging over the file icon at the leaf we wish to read the content from. We extend our graph to map over each message hash and read its content.

We see that the "Namespace Content" node takes two variables: the first resolves the user id in the path, and the second resolves the message hash. We've plugged the selected uid into the first variable and the element from map into the second.
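What the two nodes do can be approximated in plain JavaScript. The following is a sketch against an in-memory stand-in for the file system, not the actual node implementation: fillPath, namespaceRead, namespaceContent, and the store object are hypothetical names, the {hash} variable name is an assumption, and the payloads are invented example data.

```javascript
// Invented example data: leaf path -> payload.
const store = new Map([
  ["directMessages/conversations/42/bfc4", { text: "Hello" }],
  ["directMessages/conversations/42/c01d", { text: "How are you?" }],
  ["directMessages/conversations/7/a9e2", { text: "Hi" }],
]);

// Replace each {variable} in the template with the next value (var0, var1, ...).
function fillPath(template, vars) {
  let i = 0;
  return template.replace(/\{[^}]+\}/g, () => vars[i++]);
}

// Stand-in for "Namespace Read": names of the entries directly below the path.
function namespaceRead(template, vars) {
  const prefix = fillPath(template, vars) + "/";
  const names = new Set();
  for (const path of store.keys()) {
    if (path.startsWith(prefix)) names.add(path.slice(prefix.length).split("/")[0]);
  }
  return [...names];
}

// Stand-in for "Namespace Content": payload stored at the resolved leaf.
function namespaceContent(template, vars) {
  return store.get(fillPath(template, vars));
}

const selectedUserUid = "42";
const hashes = namespaceRead("directMessages/conversations/{sender}", [selectedUserUid]);
const messages = hashes.map((hash) =>
  namespaceContent("directMessages/conversations/{sender}/{hash}", [selectedUserUid, hash])
);
console.log(hashes); // [ 'bfc4', 'c01d' ]
console.log(messages); // [ { text: 'Hello' }, { text: 'How are you?' } ]
```

The map over hashes mirrors the map node in the graph: the selected uid fills the first variable and each hash fills the second.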

With this, we see that our direct messaging app works![3]

Strictness

In the previous section, we showcased the core idea behind this substrate by implementing a simple direct messages client. However, we have not yet addressed the issues we set out to address. In particular, our client still requires precision when sending data to users. The data must be in the shape {text: string}, otherwise our frontend cannot render it.

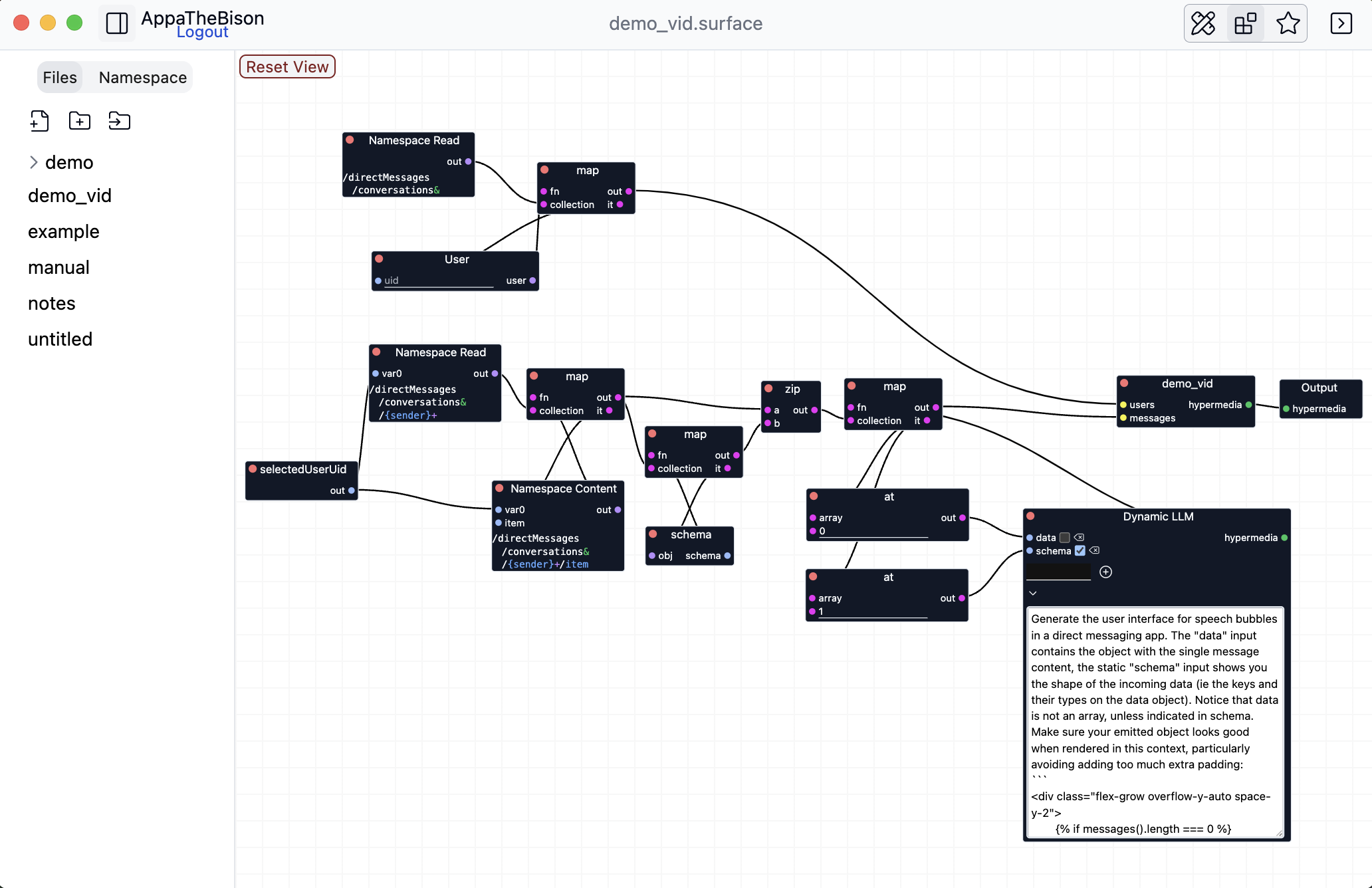

Artificial intelligence is great at dealing with fuzzy data, and it can be applied to this problem as well. The Pathfinder application has a built-in "Dynamic LLM" node. This node allows you to define a prompt as well as a number of inputs, which work as they do on all other nodes. An input can be marked as "static". When an input is static, its value is interpolated into the prompt the LLM sees. Necessarily, the LLM is re-run every time the prompt or a static input changes; if a non-static input changes, the model is not re-run. The language model is instructed in its system prompt to emit hypermedia in the same format we used to set up the scaffolding of our previous example. Formally, we can say that the Dynamic LLM node is a pure function of the prompt and the static inputs (and the random seed), yielding another function of the non-static inputs, which in turn returns hypermedia. As a Haskell signature, if you are so inclined:

    dynamicLLM :: Prompt -> StaticInput -> RandomSeed -> (NonStaticInput -> Hypermedia)
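The same shape can be sketched in JavaScript as a memoized function that returns a render function. This is an illustration only: fakeModel stands in for the actual model call, the cache is an assumption about how "re-run only when the prompt or static input changes" could be realized, and the generated template is invented.

```javascript
// Stand-in for the model call; a real implementation would query an LLM.
let modelRuns = 0;
function fakeModel(prompt, staticInput, seed) {
  modelRuns += 1;
  // The "generated" template simply renders the data as text.
  return (nonStaticInput) => `<span>${JSON.stringify(nonStaticInput)}</span>`;
}

// One generated render function per (prompt, static input, seed) triple.
const cache = new Map();
function dynamicLLM(prompt, staticInput, seed) {
  const key = JSON.stringify([prompt, staticInput, seed]);
  if (!cache.has(key)) cache.set(key, fakeModel(prompt, staticInput, seed));
  return cache.get(key);
}

const render = dynamicLLM("Render a speech bubble", { text: "string" }, 0);
console.log(render({ text: "Hello" })); // <span>{"text":"Hello"}</span>
render({ text: "How are you?" });
// Same prompt, static input, and seed: the cached function is reused.
dynamicLLM("Render a speech bubble", { text: "string" }, 0)({ text: "Bye" });
console.log(modelRuns); // 1
```

Changing only the non-static input never touches the model; changing the prompt, the static input, or the seed produces a new cache key and therefore a new model run.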

Our graph so far fetches a list of the content stored for the selected conversation. We can be generic over the data stored by mapping over each element in this array and using a DynamicLLM to generate the user interface for the speech bubble. With a little bit of consideration, we notice that we want the model to generate a new form of hypermedia only when the shape of the input changes. That is, if the first input is {"text": "Hello"} and it then sees the input {"text": "How are you?"}, the model ought to run only once, generating a generic text bubble template that interpolates the content. To achieve this, we can use the built-in "Schema" node, which takes an object and returns its "shape" (e.g. transforming {"text": "How are you?"} to {"text": "string"}). Our DynamicLLM node then has two inputs, the data and the schema. The latter is marked as "static", such that the model generates a new NonStaticInput -> Hypermedia function for each differently shaped message we see.
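To make the idea of a "shape" concrete, here is a small sketch of what a schema function might look like. It is a guess at the behavior and not the built-in node: schemaOf is a hypothetical name, and the treatment of null, nested objects, and arrays is assumed, since only the flat {"text": "string"} case is described above.

```javascript
// Hypothetical re-implementation of the idea behind the "Schema" node:
// replace every value by the name of its type, recursing into containers.
function schemaOf(value) {
  if (value === null) return "null";
  if (Array.isArray(value)) return value.map(schemaOf); // assumption
  if (typeof value === "object") {
    const shape = {};
    // Sort keys so that key order does not change the resulting shape.
    for (const key of Object.keys(value).sort()) shape[key] = schemaOf(value[key]);
    return shape;
  }
  return typeof value; // "string", "number", "boolean", ...
}

console.log(schemaOf({ text: "How are you?" })); // { text: 'string' }
console.log(schemaOf({ ducks: 5 })); // { ducks: 'number' }

// Two messages with the same shape yield the same static input.
const keyA = JSON.stringify(schemaOf({ text: "Hello" }));
const keyB = JSON.stringify(schemaOf({ text: "How are you?" }));
console.log(keyA === keyB); // true
```

Because both text messages map to the same shape, a node keyed on the schema would only need to generate one template for them.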

With the given messages, we see essentially the same user interface, with slightly worse-looking speech bubbles, as they are now generated by the language model. However, let's look at what happens when AnotherUser makes herself a drawing app and sends one of those drawings to us.

Being able to receive the image from AnotherUser's paint app costs us zero effort! And even the more nonsensical data, {"ducks": 5}, which we sent from the web interface, is interpreted reasonably. Also, despite the interface showing loading states for each message bubble, the model was only evaluated three times: once for {"text": "string"}, once for the image data payload[4], and once for {"ducks": "number"}.

Security

Now, there need to be access controls on different parts of the protocol file system. Even arguing that we are only sending datagrams, we must consider that they can be used to prompt-inject any LLM nodes in the graph (which in turn generate arbitrary JavaScript) and, at a minimum, allow for intense spam[5]. For this reason, the initial release has a simple global allow list under "Platform" on this web page, to which you must add any user you want to be able to send you data. Beyond that, there are also the access controls in the paths mentioned before, like directMessages/conversations&/{sender}+, where anyone can descend through conversations but can only write to the folder with their own user id.

Please make sure to only add people you trust to your allow list. This is a research prototype and not sufficiently hardened!

Unrepresentable Applications

This system is surprisingly expressive, but there are many applications it can't express. This is because, in its current form, the client frontend is a pure function of the network state and the graph inputs (like the selected conversation). There is some statefulness in the actual frontend code, for instance the canvas state in the drawing app, and it is the same statefulness you have access to when developing any web app. One could use localStorage to try to implement client-side state, but it is clearly not idiomatically integrated into this system. In total, this means that most interactions (for instance a notification inbox) cannot be implemented.

Hypermedia Markup

A "surface" is a text document with some hypermedia markup. Each surface is available as a node in the graph, and each surface keeps track of a graph stored with it.

We've implemented a JavaScript framework designed to create both complete applications and small fragments that are interpolated into larger markup. It also integrates natively with the graph. Under the hood, it works by compiling your markup to one big JavaScript function that hydrates the DOM, creates elements, attaches event listeners, and so on. To state the conclusion upfront, this is not the best approach. Implementing our own system was the right thing to do for this research demo, since it made achieving all the interactions we wanted much more straightforward. However, the language models struggle with some of the precise usage, since none of this is in their training data.

Core Syntax

The core syntax looks like HTML with JavaScript interleaved, allowing you to create the entire document in one place. The runtime relies on signals through the createSignal(), createEffect(), and untrack() methods. To create a simple click counter button:

    <div>
      {%:
        const [v, setV] = createSignal(0);
      %}
      <button class="p-1 border rounded" onclick={() => {setV(v() + 1)}}>Clicked {v()} times</button>
    </div>
    <!-- v, setV dropped here -->

Hopefully, most of this is intuitive. We use the {%: ... %} syntax to create a JavaScript block. The outer div is important since it defines the scope of the JavaScript block. As indicated, the variables v and setV are dropped outside of the outermost div. We interpolate expressions into the markup with {expression} and bind the event listener as shown. Tailwind CSS classes are available everywhere.
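For readers unfamiliar with signals, the following sketch shows how a minimal runtime with these three functions could behave. It is not Pathfinder's runtime: it assumes that createSignal returns a getter/setter pair (as the counter example suggests) and that untrack takes a function, and it omits dependency cleanup and batching.

```javascript
// Minimal signal runtime for illustration only.
let currentEffect = null;

function createSignal(initial) {
  let value = initial;
  const subscribers = new Set();
  const get = () => {
    // Reading inside an effect subscribes that effect to this signal.
    if (currentEffect) subscribers.add(currentEffect);
    return value;
  };
  const set = (next) => {
    value = next;
    // Iterate over a copy so re-subscription during a run cannot loop.
    for (const effect of [...subscribers]) effect();
  };
  return [get, set];
}

function createEffect(fn) {
  const run = () => {
    const previous = currentEffect;
    currentEffect = run;
    try { fn(); } finally { currentEffect = previous; }
  };
  run(); // run once immediately to collect dependencies
}

function untrack(fn) {
  const previous = currentEffect;
  currentEffect = null; // reads inside fn do not subscribe
  try { return fn(); } finally { currentEffect = previous; }
}

const [v, setV] = createSignal(0);
const [label, setLabel] = createSignal("Clicked");
const log = [];
createEffect(() => log.push(`${untrack(label)} ${v()} times`));
setV(v() + 1); // re-runs the effect
setLabel("Pressed"); // does not: label was read inside untrack
console.log(log); // [ 'Clicked 0 times', 'Clicked 1 times' ]
```

In the counter button, the interpolated {v()} plays the role of the effect here: it is re-evaluated whenever setV is called.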

There is a special syntax for loops and if statements inside of the markup, which works as you'd expect.

    {% for item in items() %}
      <!-- Loop content -->
    {% end %}

    {% for item, index in items() %}
      <!-- Loop content -->
    {% end %}

    {% if condition %}
      <!-- If block -->
    {% elif otherCondition %}
      <!-- Optional elif -->
    {% elif otherCondition2 %}
      <!-- Optional elif -->
    {% else %}
      <!-- Optional else -->
    {% end %}

It is possible to create reusable components simply by giving an HTML element a component name using the @Name syntax and defining component props using a leading *. The HTML element this syntax appears on is the outermost element of the component:

    <div>
      <div @BackgroundSpan *color>
        <span style={`background-color: ${color};`}>
          {@ children}
        </span>
      </div>
      <BackgroundSpan color="red">First</BackgroundSpan>
      <BackgroundSpan color="green">Second</BackgroundSpan>
    </div>

The element marked with @Name does not appear in the DOM. Here we've used the {@ expression} syntax to interpolate the special children variable into the markup. This syntax can be used with any expression, and is the primary way of interpolating hypermedia generated from the graph into the markup. There is also a shorthand {# slot} expression, which exposes an input on the surface's node and interpolates the connected hypermedia at its place.

Interacting with the graph

There are two directives for interacting with the graph: $pull(name: string) and $push(name: string, type: string). We are sticking with the Svelte convention of marking functions that have special compile-time transformations with a dollar sign. $pull(name) exposes a slot on the node in the graph and returns the getter part of a signal, which evaluates to the data gathered from the graph. $push(name, type) returns a full signal. It is exposed as an "input" node with the given name in the graph. You can use the setter part of the signal to "push" data to the graph, causing it to re-evaluate. The getter part can be used to access the data you've set in other parts of your code for convenience. The "type" parameter is optional; it is only used to color the input slot and doesn't involve any type checks.

Special syntax

Any element accepts the ref prop which takes a function where the first argument is the HTMLElement of the referenced element in the DOM.

Runtime

You can also interact with the backend programmatically, using the send(to: string, path: string, data: any), list(path: string), and content(path: string) functions. Note that a path shouldn't include any of the access modifiers (i.e. + and &), and that you can use the @ symbol to reference your own uid, for instance: directMessages/conversations/@. Also note that everywhere in the API where you are asked for the UID of a user, except in paths, you can also enter the @username.
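To illustrate the call shapes, here is a mock of the three functions over an in-memory store. It is a sketch under several assumptions and not the real API: createClient and backend are hypothetical, the functions are synchronous here, the caller supplies the leaf name (how message hashes are generated is not described), and neither the allow list nor the access modifiers are enforced.

```javascript
// Mock backend: uid -> Map(leaf path -> payload). Purely illustrative.
const backend = new Map();

function createClient(uid) {
  // "@" as a path segment stands for the caller's own uid.
  const resolve = (path) =>
    path.split("/").map((seg) => (seg === "@" ? uid : seg)).join("/");
  const fsOf = (owner) => {
    if (!backend.has(owner)) backend.set(owner, new Map());
    return backend.get(owner);
  };
  return {
    // Write `data` at `path` in the file system of user `to`.
    send(to, path, data) {
      fsOf(to).set(resolve(path), data);
    },
    // Names of the entries directly below `path` in our own file system.
    list(path) {
      const prefix = resolve(path) + "/";
      const names = new Set();
      for (const p of fsOf(uid).keys()) {
        if (p.startsWith(prefix)) names.add(p.slice(prefix.length).split("/")[0]);
      }
      return [...names];
    },
    // Payload stored at the leaf `path` in our own file system.
    content(path) {
      return fsOf(uid).get(resolve(path));
    },
  };
}

const alice = createClient("42");
const bob = createClient("7");
alice.send("7", "directMessages/conversations/@/bfc4", { text: "Hello" });
console.log(bob.list("directMessages/conversations")); // [ '42' ]
console.log(bob.list("directMessages/conversations/42")); // [ 'bfc4' ]
console.log(bob.content("directMessages/conversations/42/bfc4")); // { text: 'Hello' }
```

The @ in the sender's path resolves to the sender's own uid, so the message lands in the folder named 42 inside the recipient's tree, matching the directMessages protocol.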

Conclusion

The hope with this project is to explore how we can simplify how networked applications are built. We've stripped back the structure of the backend maximally and used a machine learning model to fill the one gap that usually demands such structure. While the applications that can be built are rudimentary, and this has been disclaimed enough throughout, it is striking how much the backend could be simplified.

Particularly, it seems that there really was only one piece that needed to be replaced with an AI model for all the complexity to melt away. We could also argue that this project shows that user interfaces are the bottleneck, in the sense that it is easy to transfer data between machines and build the application logic, but once you go ahead and implement an intuitive and interactive interface to this data and logic, any straightforwardness in the backend you allowed yourself punishes you with exploding complexity in the frontend.

It is not clear how to implement certain centralising structures (particularly those which require compute and expertise like content feed generators), but here we have taken a first step of religiously re-considering how our applications are designed in the age of machine intelligence.

Footnotes

1. This is knowingly extremely limited and cannot express most applications.

2. How the hypermedia language and all of this works is documented below.

3. Sending is implemented by using the send method inside the hypermedia template, which takes a user to send to, a path, and a JSON data payload. Be careful to also write the message you've sent to someone else into your own copy of the file system (using a send pointed at yourself); this has implications for transactionality.

4. We don't even know what the LLM chose to send (!!)

5. Email has no access controls per se and requires deploying massively sophisticated algorithms to keep your inbox somewhat usable.